Welcome to the first chapter of my Deep Learning Foundations series. Today, I’m excited to share my insights into matrix multiplication, an essential piece in the puzzle of neural networks. For those stepping into the vast world of deep learning, understanding this key concept of linear algebra is a game-changer.

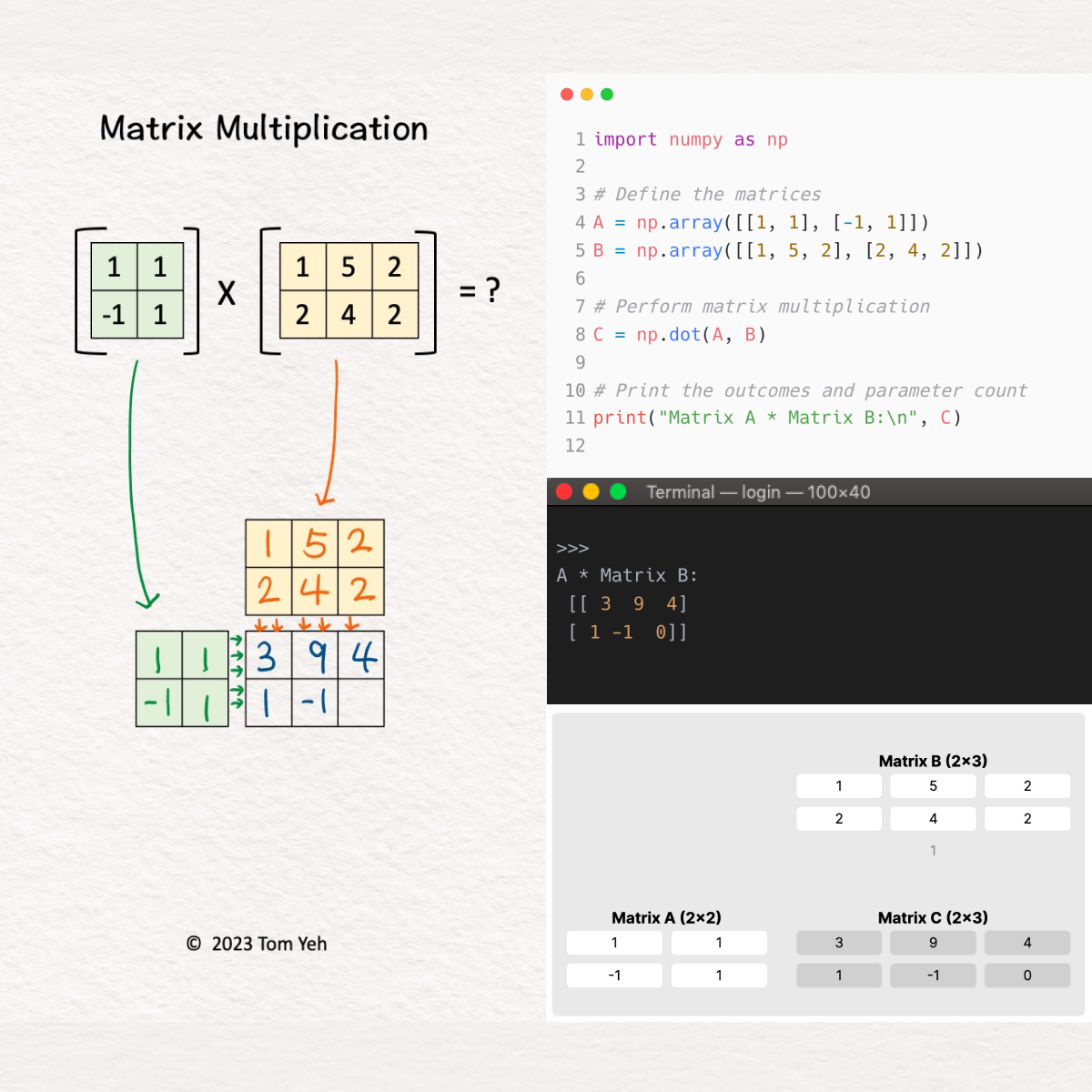

Matrix Multiplication:

- Dimensions and Scaling: Discover how matrix dimensions interact and the importance of scaling in my explorations.

- Dot Product Basics: Delve into my journey of understanding dot products and their role in merging matrix rows and columns, pivotal for crafting neural network structures.

Matrix multiplication is the workhorse behind deep learning: a fully connected layer computes its outputs by multiplying its inputs by a weight matrix, so nearly every neural network task reduces to many of these products.

Despite its critical role, I’ve often found myself and others hesitating when faced with matrix multiplication. This prompted me to develop a simple yet effective approach to working through matrix multiplications by hand.

Let’s consider multiplying matrices A and B to get matrix C (A x B = C). My method sheds light on several aspects:

💡 Dimensions: C has as many rows as A and as many columns as B, so the size of the result can be read straight off the two inputs.

💡 Scalability: A, B, and C can grow or shrink as long as the dimensions stay compatible. Adding rows to A or columns to B simply adds rows or columns to C, while changing A’s column count means changing B’s row count to match.

💡 Row vs. Column Vectors: Each element of C is the dot product of one row from A (in green) and one column from B (in yellow). The element in row i, column j of C pairs row i of A with column j of B.

💡 Stackability: Because the output of one multiplication can serve as the input to the next, this layout makes it easy to visualize consecutive matrix multiplications, which is invaluable for understanding networks like multi-layer perceptrons and the fundamentals of deep learning architectures.
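To make these four points concrete, here is a minimal sketch of the by-hand procedure in Python. The helper name `matmul_by_hand` and the extra matrix `D` are my own illustrative assumptions, not part of the original exercise; `A` and `B` are the same small matrices used in the code walkthrough.

```python
import numpy as np

def matmul_by_hand(A, B):
    """Multiply two 2-D arrays with explicit row-by-column dot products.

    Hypothetical helper for illustration only.
    """
    n_rows, inner = A.shape
    inner_b, n_cols = B.shape
    # Dimensions: the columns of A must match the rows of B.
    if inner != inner_b:
        raise ValueError(f"cannot multiply {A.shape} by {B.shape}")
    # C takes its row count from A and its column count from B.
    C = np.zeros((n_rows, n_cols), dtype=np.result_type(A, B))
    for i in range(n_rows):
        for j in range(n_cols):
            # Element (i, j) is the dot product of row i of A and column j of B.
            C[i, j] = sum(A[i, k] * B[k, j] for k in range(inner))
    return C

A = np.array([[1, 1], [-1, 1]])       # 2x2
B = np.array([[1, 5, 2], [2, 4, 2]])  # 2x3
C = matmul_by_hand(A, B)              # 2x3

# Stackability: C can feed straight into another multiplication.
D = np.array([[1, -1]])               # 1x2, an assumed extra matrix
E = matmul_by_hand(D, C)              # 1x3

print(C.shape, E.shape)  # (2, 3) (1, 3)
```

The triple loop is deliberately slow and explicit; it mirrors what you would do on paper, one row-column pair at a time.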

Through demystifying matrix multiplication, I’ve laid the foundation for a deeper dive into deep learning mechanics. Stick around for more discoveries in this Deep Learning Foundations series.

Walking Through the Code:

NumPy, My First Ally:

- The adventure begins by bringing in NumPy, a library that supercharges Python with a high-performance array object and tools for array manipulation. It’s my go-to for numerical computations, especially when dealing with matrices.

Crafting the Matrices:

- `A` emerges as a 2x2 matrix, arrayed within arrays, where each nested array is a row. Specifically, `A` is composed of `[1, 1]` and `[-1, 1]`.

- `B` takes form as a 2x3 matrix, similarly arrayed. It unfolds into two rows, `[1, 5, 2]` and `[2, 4, 2]`, each stretching across three columns.
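The embedded code is not reproduced in this text, so the following is a sketch of what this step likely looks like, assuming the matrices are built with `np.array` from nested lists:

```python
import numpy as np

# Each inner list is one row of the matrix.
A = np.array([[1, 1],
              [-1, 1]])     # 2 rows, 2 columns
B = np.array([[1, 5, 2],
              [2, 4, 2]])   # 2 rows, 3 columns

print(A.shape)  # (2, 2)
print(B.shape)  # (2, 3)
```

Checking `.shape` before multiplying is a cheap habit that catches most dimension mistakes early.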

The Heart of Multiplication:

- Employing `np.dot`, I marry `A` and `B`. The offspring, matrix `C`, embodies the multiplication efforts. In this dance, `A`’s columns and `B`’s rows must mirror each other in number, enabling their union. Here, `A`’s two columns perfectly complement `B`’s two rows.

- `C` inherits its dimensions from the outer dimensions of `A` and `B`: `A`’s row count and `B`’s column count. With `A` as a 2x2 and `B` as a 2x3, `C` proudly stands as a 2x3 matrix.
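As a hedged illustration of the compatibility rule, the sketch below multiplies the two matrices with `np.dot` and then tries the reverse order, which fails because the inner dimensions no longer match. The `try`/`except` demonstration is my own addition, not something the original code is known to include.

```python
import numpy as np

A = np.array([[1, 1], [-1, 1]])       # 2x2
B = np.array([[1, 5, 2], [2, 4, 2]])  # 2x3

# Inner dimensions must agree: columns of A == rows of B.
assert A.shape[1] == B.shape[0]

C = np.dot(A, B)
print(C.shape)  # (2, 3): rows from A, columns from B

# For 2-D arrays, the @ operator gives the same result as np.dot.
assert np.array_equal(C, A @ B)

# Reversing the order fails: B has 3 columns but A has only 2 rows.
try:
    np.dot(B, A)
except ValueError as err:
    print("B x A is not defined:", err)
```

Matrix multiplication is not commutative, and here the reversed product does not even exist.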

Revealing the Magic:

- The finale involves unveiling `C`’s essence, showcasing the transformative power of matrix multiplication on the initial matrices.
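A sketch of this last step, assuming the result is simply printed. The spot check of a single element is my own addition, included to tie the output back to the row-times-column rule.

```python
import numpy as np

A = np.array([[1, 1], [-1, 1]])
B = np.array([[1, 5, 2], [2, 4, 2]])
C = np.dot(A, B)

print(C)
# [[ 3  9  4]
#  [ 1 -1  0]]

# Spot check: row 0 of A dotted with column 1 of B gives C[0, 1].
top_middle = A[0] @ B[:, 1]   # 1*5 + 1*4
print(top_middle)  # 9
```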

By dissecting this example, I aim to illuminate the path of matrix multiplication, highlighting its critical role in both linear algebra and the deeper realms of deep learning. With NumPy’s elegant syntax and potent functionality, embarking on complex mathematical adventures becomes not just feasible, but exhilarating, paving the way for advancements in deep learning.

Original Inspiration

The spark for this series was ignited by the innovative exercises developed by Dr. Tom Yeh at the University of Colorado Boulder. His commitment to hands-on education resonates deeply with me, especially in a field as dynamic and critical as deep learning. Facing a lack of practical learning resources, Dr. Yeh crafted a comprehensive suite of exercises, beginning with fundamental concepts like matrix multiplication and extending to the intricacies of advanced neural architectures. His efforts have not only enriched the learning experience for his students but also inspired me to delve deeper into the foundational elements of AI and share these discoveries with you.

Wrapping Up and Looking Ahead

Diving into matrix multiplication has been about more than just numbers and operations; it’s a gateway into the intricate world of deep learning. By breaking down this fundamental process, we’ve started building a solid base, one that’s essential for anyone looking to navigate through the complexities of neural networks. The journey we embarked on today is just the beginning. In my upcoming posts, I’ll expand on these basics, exploring how these core principles apply to more complex neural network structures. Keep an eye out for my next piece, where we’ll transition from the theoretical groundwork to the practical application of these concepts in creating more sophisticated models. And, as always, I look forward to sharing insights, sparking discussions, and growing together in this fascinating exploration of AI. Check out my LinkedIn posts where we can continue the conversation and exchange ideas.

- Next Post: Understanding Single Neuron Networks

About Jeremy London

Jeremy from Denver: AI/ML engineer with Startup & Lockheed Martin experience, passionate about LLMs & cloud tech. Loves cooking, guitar, & snowboarding. Full Stack AI Engineer by day, Python enthusiast by night. Expert in MLOps, Kubernetes, committed to ethical AI.